Data Virtualization for Mainframes: Integrating z/OS With Modern Analytics and AI Systems

23.01.2024

The enterprise technology landscape stands at a critical crossroads. While artificial intelligence and cloud computing dominate headlines, thousands of organizations quietly grapple with a less glamorous but equally consequential challenge: what to do with decades-old COBOL applications that still power critical business operations. These legacy systems, built when mainframes were the pinnacle of computing power, continue processing trillions of dollars in transactions daily. Yet they represent an increasingly precarious foundation for modern business.

According to research from IBM, approximately 220 billion lines of COBOL code remain in active production today, processing an estimated 95% of ATM transactions and handling 80% of in-person credit card purchases worldwide. The financial services industry alone depends on COBOL for core banking operations, insurance claim processing, and regulatory compliance systems that simply cannot afford downtime or data loss.

The pressures facing these organizations in 2025 have reached a tipping point. Digital transformation is no longer optional—customers expect mobile-first experiences, real-time transaction processing, and seamless integration across platforms. Cloud adoption has become a strategic imperative, with Gartner predicting that by 2025, over 85% of enterprises will embrace a cloud-first principle. Meanwhile, the talent crisis deepens: the average COBOL programmer is approaching retirement age, and universities stopped teaching the language decades ago. The collision of technological evolution and workforce attrition has created an urgent mandate for modernization.

This comprehensive guide explores how enterprises are successfully navigating the journey from mainframe-bound COBOL applications to modern, cloud-native architectures. We'll examine proven strategies, dissect the technical challenges, and provide actionable frameworks for organizations embarking on their own modernization initiatives.

The business case for COBOL modernization extends far beyond technical debt. Organizations face mounting pressure from multiple directions that make maintaining the status quo increasingly untenable.

Market Agility and Competitive Disadvantage: Legacy COBOL systems were designed for stability, not flexibility. Adding new features or products can take months in a mainframe environment, while cloud-native competitors iterate in days or weeks. Financial institutions watching fintech startups rapidly deploy new services understand that technology architecture directly impacts competitive positioning. The monolithic nature of COBOL applications means that a simple change to one module can require extensive regression testing across the entire system, creating bottlenecks that slow innovation to a crawl.

Customer Experience Expectations: Modern consumers expect omnichannel experiences—starting a transaction on mobile, continuing on web, and completing in-branch with full continuity. Legacy systems struggle with these integration demands. API-first architectures and microservices enable the real-time, personalized interactions that customers now consider standard. COBOL systems, often batch-oriented and built before APIs existed, require extensive middleware layers just to expose basic functionality to modern channels.

Regulatory Compliance and Risk Management: Regulatory requirements have grown exponentially more complex since most COBOL systems were designed. Real-time fraud detection, instant regulatory reporting, and comprehensive audit trails strain systems architected for overnight batch processing. NIST cybersecurity frameworks emphasize zero-trust architectures and continuous monitoring—concepts difficult to implement in legacy environments. The inability to quickly adapt to new regulations creates both compliance risk and competitive disadvantage.

The technical debt accumulated over decades creates operational vulnerabilities that grow more severe with time.

Catastrophic Knowledge Loss: The COBOL talent pool is shrinking rapidly through retirement, with few young developers entering the field. Organizations face "knowledge cliffs" where critical system understanding exists only in the minds of a handful of employees. When these individuals leave, they take with them undocumented business rules, workarounds, and tribal knowledge essential for system maintenance. This creates single points of failure that threaten business continuity.

Hardware Dependencies and Vendor Lock-In: Mainframe hardware represents massive capital investments with limited flexibility. Organizations remain locked into specific vendors, often IBM, paying premium prices for hardware refreshes and software licensing. As mainframe expertise becomes scarcer, vendor negotiations become increasingly one-sided. The specialized nature of mainframe operations—from unique operating systems to proprietary database systems—makes migration appear daunting, perpetuating the lock-in.

Integration Complexity: Modern digital ecosystems demand seamless integration with cloud services, mobile applications, analytics platforms, and third-party APIs. Legacy COBOL systems were never designed for this level of interconnection. Organizations build complex middleware layers, creating what amounts to a "spaghetti architecture" of point-to-point integrations that become increasingly difficult to maintain and understand. Each new integration adds another layer of technical debt.

While mainframe advocates argue that MIPS costs have declined, the total cost of ownership tells a different story. Mainframe operations include hardware leasing or ownership, specialized cooling and power requirements, vendor software licensing often based on peak utilization, and costly disaster recovery infrastructure. These fixed costs don't scale down during periods of lower demand, unlike cloud infrastructure from AWS and other providers that can be right-sized dynamically.

COBOL programmer salaries have inflated as scarcity increases bargaining power. Organizations often pay premium rates for contractors to fill gaps, sometimes engaging the same retired developers as consultants at multiples of their former salaries. The specialized skills required for mainframe system administration, database management, and networking further drive up personnel costs. Unlike modern cloud platforms where skills are abundant and training resources plentiful, mainframe expertise commands ever-higher premiums.

Perhaps most significant are the opportunities foregone due to technology constraints. New product development that would generate revenue is delayed or abandoned because underlying systems can't adapt quickly enough. Business intelligence initiatives struggle because data remains trapped in VSAM files or hierarchical databases. The inability to leverage modern technologies like artificial intelligence, machine learning, and advanced analytics represents a strategic disadvantage that compounds over time.

Universities phased out COBOL from curricula in the 1990s and 2000s, viewing it as obsolete. This created a generational gap: programmers under 40 rarely have COBOL experience, while those with expertise approach retirement. During the COVID-19 pandemic, several U.S. states scrambled to find COBOL programmers to maintain unemployment benefits systems overwhelmed by unprecedented claim volumes—a preview of the broader crisis organizations face. The cognitive overhead of maintaining decades-old code without modern development practices compounds the problem. Legacy COBOL applications often lack comprehensive documentation, automated testing, or version control.

Organizations embarking on COBOL modernization face a spectrum of strategic options, each with distinct trade-offs between risk, cost, timeline, and business value. Understanding these approaches enables informed decision-making aligned with organizational capabilities and objectives.

Rehosting represents the lowest-risk, fastest path to moving off physical mainframe hardware by migrating applications to x86 servers or cloud virtual machines with minimal code changes.

How It Works: Organizations use mainframe emulation software to recreate the mainframe environment on commodity hardware or cloud infrastructure. The COBOL code, JCL (Job Control Language), and associated programs run largely unchanged within this emulated environment. Tools from vendors like Micro Focus or LzLabs enable COBOL applications to execute on Linux or Windows servers.

Advantages: Speed is the primary benefit: migrations can complete in months rather than years. Risk remains relatively low since the application logic doesn't change, reducing the likelihood of introduced defects. Organizations achieve hardware cost savings by moving off expensive mainframe hardware and reduce their dependence on the mainframe hardware vendor, although the emulation layer introduces a dependency of its own. This approach provides breathing room to plan more comprehensive modernization while solving immediate cost or capacity constraints.

Disadvantages: Organizations realize limited business value beyond cost reduction. The application remains architecturally obsolete—still monolithic, still difficult to integrate with modern systems, still challenging to enhance. Development productivity doesn't improve meaningfully. Performance characteristics may change, sometimes negatively, depending on the emulation layer efficiency. Organizations essentially perpetuate technical debt while changing the underlying hardware platform.

Best Fit: Rehosting works well for applications nearing end-of-life that don't justify modernization investment, or as a tactical first step in a phased modernization journey. It's also appropriate when organizations face immediate mainframe capacity constraints or expiring hardware leases and need a quick solution.

Cost Considerations: While lower than other approaches, costs still include emulation software licensing, infrastructure setup, migration tooling, and extensive testing. Budget between $500,000 and $3 million for mid-sized applications, with timelines of 6-18 months.

Replatforming involves moving applications to a new platform while making some modifications to take advantage of cloud capabilities without completely rewriting code.

How It Works: Organizations might containerize COBOL applications using tools like Red Hat OpenShift, enabling cloud deployment while maintaining the COBOL codebase. They might refactor batch jobs into scheduled cloud functions, or replace mainframe databases with cloud-native alternatives while keeping business logic intact. The code undergoes selective modifications rather than wholesale replacement.

Advantages: This approach balances risk and reward better than pure rehosting. Organizations gain access to cloud scalability, modern DevOps practices, and improved disaster recovery capabilities. Integration with cloud services becomes easier. Development teams can begin working with modern tools and platforms even while maintaining COBOL code. The incremental nature allows organizations to build cloud competency gradually.

Disadvantages: Applications remain partially constrained by legacy architecture. Technical debt isn't fully addressed, just redistributed. The hybrid approach can create complexity, as teams must maintain expertise in both legacy and modern technologies. Performance optimization often requires additional work since the code wasn't originally designed for the target platform. Organizations may face "stuck in the middle" scenarios where they've invested significantly but haven't achieved full modernization benefits.

Best Fit: Replatforming suits organizations with applications that must remain operational long-term but have critical components that need cloud capabilities. It's effective when specific bottlenecks like database performance or batch processing windows drive the initiative rather than complete application replacement.

Cost Considerations: Budget 1.5-3x rehosting costs, typically $1-5 million for mid-sized applications with 12-24 month timelines. The variability depends heavily on how much code requires modification.

Refactoring involves automated or semi-automated transformation of COBOL code into modern programming languages while preserving business logic and functionality.

How It Works: Organizations use code transformation tools that parse COBOL source code, analyze business logic, and generate equivalent code in target languages like Java or C#. AWS Mainframe Modernization offers automated refactoring services, as do vendors like Blu Age and TSRI. These tools map COBOL data structures to object-oriented classes, translate procedural logic to modern paradigms, and generate frameworks for database access and transaction management.

Advantages: Applications emerge in modern languages that a large pool of developers understands, dramatically improving long-term maintainability. The code can leverage modern frameworks, development tools, and cloud-native services. Organizations gain flexibility to evolve applications using contemporary practices like microservices, containers, and continuous deployment. The talent pool expands enormously when moving from COBOL to Java or .NET. Applications become easier to integrate with modern systems and services.

Disadvantages: Automated refactoring rarely produces optimal code. It generates functional equivalents of procedural COBOL logic rather than well-architected object-oriented designs, so the resulting code often requires significant cleanup and restructuring. Subtle business logic embedded in COBOL idioms may not translate perfectly, and extensive testing is required to demonstrate behavioral equivalence. Organizations must also build entirely new deployment and operational procedures.

Best Fit: Refactoring works best for applications with well-structured COBOL code, comprehensive documentation, and existing test suites that enable validation. It's ideal when organizations want to preserve business logic exactly while modernizing the technology stack. Applications with stable requirements benefit most, as the substantial refactoring investment assumes long future lifespans.

Cost Considerations: Expect $3-8 million for mid-sized applications with 18-36 month timelines. Automated refactoring tools reduce some costs, but testing, validation, and code optimization remain labor-intensive. The total cost of ownership decreases over time as maintenance becomes easier.

Complete application rewrites involve building new systems using modern architectures, languages, and cloud-native design patterns while reimplementing business functionality.

How It Works: Organizations analyze existing COBOL applications to extract business requirements, then design and build cloud-native replacements from the ground up. Teams employ microservices architectures, containerization, API-first designs, and modern development practices. Business logic is reimagined for current needs rather than mechanically ported.

Advantages: This approach delivers maximum architectural flexibility and optimization. Applications can be designed specifically for cloud environments, leveraging serverless computing, managed services, and elastic scalability. Organizations eliminate all technical debt and can simplify or eliminate obsolete functionality discovered during requirements analysis. The resulting systems align with contemporary best practices and can incorporate modern capabilities like AI/ML from inception. Development teams work entirely with modern technology stacks.

Disadvantages: Rewriting represents the highest-risk approach. Extracting complete, accurate requirements from decades-old COBOL code proves extraordinarily difficult—undocumented business rules, edge cases, and implicit assumptions are easily overlooked. Projects frequently experience cost overruns and timeline delays. Organizations must maintain parallel systems during development, running both old and new concurrently. The sheer scope of reimplementing millions of lines of business logic creates project management challenges. Many high-profile rewrite projects have failed spectacularly, sometimes forcing organizations back to their legacy systems after years of investment.

Best Fit: Rewrites make sense when legacy applications have become so constrained that incremental modernization can't address fundamental architectural limitations. They're appropriate when business requirements have evolved dramatically from the original design, making preservation of existing logic counterproductive. Rewrites work better for smaller, well-bounded applications rather than massive, interconnected systems.

Cost Considerations: Budget $5-20+ million for substantial applications with 24-60 month timelines. The wide range reflects extreme variability in complexity and scope. Many rewrite projects exceed their original budgets by 50-200%.

Organizations can replace custom COBOL applications with modern commercial software packages or Software-as-a-Service solutions that provide equivalent business functionality.

How It Works: Rather than modernizing legacy code, organizations implement pre-built solutions. Core banking systems, insurance policy administration platforms, and ERP systems from vendors eliminate the need for custom development. These packages offer modern interfaces, cloud deployment, and regular updates managed by vendors.

Advantages: Organizations offload ongoing development and maintenance to software vendors, dramatically reducing technical staff requirements. Modern packages include features and capabilities that would take years to develop internally. Regulatory compliance updates arrive as part of standard releases. Implementation timelines can be shorter than custom development. Organizations benefit from vendor R&D investments and industry best practices embedded in the software.

Disadvantages: Commercial packages rarely match custom applications feature-for-feature, requiring business process changes that may encounter organizational resistance. Configurations and customizations needed to fit organizational needs can be expensive and create upgrade complications. Vendor lock-in replaces mainframe lock-in—organizations become dependent on the new vendor's roadmap and pricing. Data migration from proprietary mainframe formats to new systems remains complex and risky.

Best Fit: Replacement works well for commodity business functions where custom solutions don't provide competitive advantage. Back-office functions, accounting systems, and standardized processes are good candidates. This approach suits organizations wanting to focus technical resources on differentiating capabilities rather than maintaining infrastructure applications.

Cost Considerations: Licensing costs vary widely but plan for $1-10+ million in software costs plus $2-8 million in implementation services for enterprise-scale deployments. Timeline expectations run 12-36 months. Ongoing subscription fees must be factored into total cost of ownership.

Rather than replacing COBOL applications, organizations can encapsulate them behind modern API layers, exposing functionality to new channels while preserving core logic.

How It Works: Tools like IBM z/OS Connect enable organizations to create RESTful APIs that invoke mainframe transactions and access data. The COBOL code remains unchanged while becoming accessible to mobile apps, web services, and cloud applications through standard API protocols. Organizations essentially treat the mainframe as a services backend.

Advantages: This low-risk approach extends legacy system life by making them accessible to modern applications. Implementation is relatively quick and inexpensive. Business logic remains in proven, stable code while new user experiences can be developed rapidly. Organizations can incrementally migrate functionality—exposing APIs initially, then gradually replacing backend logic over time.

Disadvantages: The underlying technical debt and operational challenges persist. Organizations still depend on mainframe infrastructure and COBOL expertise. Performance bottlenecks in legacy systems constrain the entire ecosystem. This approach doesn't solve long-term sustainability issues—it merely delays them while creating additional integration complexity.

Best Fit: API enablement works as a tactical interim strategy while planning comprehensive modernization, or for applications requiring limited external access.

Cost Considerations: Budget $250,000-$1.5 million for establishing API infrastructure with 4-12 month timelines.

Successful modernization requires methodical planning and execution. The following framework provides a proven approach for navigating the technical and organizational complexities inherent in legacy transformation.

Comprehensive understanding of the existing estate forms the foundation for all subsequent decisions. Organizations that rush past this phase invariably encounter expensive surprises during implementation.

Critical Assessment Activities:

Pay special attention to mainframe file formats. VSAM files, QSAM sequential files, and other mainframe-specific structures need mapping to cloud-native alternatives. Document field-level details: COBOL PICTURE clauses, COMP-3 packed decimal fields, and EBCDIC encoding all require handling during migration. Data dictionaries and copybooks provide essential metadata but may be incomplete or outdated.
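The field-level handling described above can be made concrete with a minimal Python sketch. It assumes packed decimal fields use the usual layout (two digits per byte, sign in the final half-byte) and that text is encoded in code page 037; the actual code page varies by installation and must be confirmed before migration. The function names are illustrative, not taken from any particular tool.

```python
from decimal import Decimal

NEGATIVE_SIGNS = {0x0B, 0x0D}
POSITIVE_SIGNS = {0x0A, 0x0C, 0x0E, 0x0F}  # 0x0F commonly marks unsigned fields


def unpack_comp3(raw: bytes, scale: int = 0) -> Decimal:
    """Decode a COMP-3 (packed decimal) field.

    `scale` is the number of implied decimal places, i.e. the count of
    9s after the V in the PICTURE clause (an input the caller supplies).
    """
    if not raw:
        raise ValueError("empty packed-decimal field")
    nibbles = []
    for byte in raw:
        nibbles.append(byte >> 4)      # high half-byte
        nibbles.append(byte & 0x0F)    # low half-byte
    sign = nibbles.pop()               # last half-byte carries the sign
    if sign not in NEGATIVE_SIGNS | POSITIVE_SIGNS or any(n > 9 for n in nibbles):
        raise ValueError(f"invalid packed-decimal bytes: {raw.hex()}")
    magnitude = int("".join(str(n) for n in nibbles))
    value = -magnitude if sign in NEGATIVE_SIGNS else magnitude
    return Decimal(value).scaleb(-scale)


def decode_ebcdic(raw: bytes, codepage: str = "cp037") -> str:
    """Decode an EBCDIC text field and drop trailing pad spaces."""
    return raw.decode(codepage).rstrip()
```

For example, the three bytes `12 34 5C` with two implied decimal places decode to 123.45. Returning `Decimal` rather than `float` keeps monetary values exact, which matters when the migrated data is later reconciled against the source.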

Understanding how programs interact is crucial for planning migration sequences. Database dependencies require special attention—catalog which programs access which files or database tables, and map how data flows through the system. Understanding read versus write patterns helps identify potential race conditions or data consistency issues that might surface in cloud environments with different concurrency characteristics.
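As a rough illustration of dependency mapping, the sketch below scans COBOL source text for literal `CALL 'PROGRAM'` statements and derives an order in which called programs come before their callers. It is a simplification under stated assumptions: fixed-format source with the comment indicator in column 7, no dynamic calls through data names, and no CICS or JCL-level dependencies, all of which a real inventory would also need to capture. The function names are hypothetical.

```python
import re
from graphlib import TopologicalSorter  # Python 3.9+

CALL_PATTERN = re.compile(r"\bCALL\s+['\"]([A-Z0-9-]+)['\"]", re.IGNORECASE)


def static_calls(source: str) -> set[str]:
    """Return program names invoked through literal CALL statements."""
    targets: set[str] = set()
    for line in source.splitlines():
        # Fixed-format COBOL: '*' or '/' in column 7 marks a comment line.
        if len(line) > 6 and line[6] in "*/":
            continue
        targets.update(name.upper() for name in CALL_PATTERN.findall(line))
    return targets


def migration_order(sources: dict[str, str]) -> list[str]:
    """Order programs so that each one follows the programs it calls."""
    graph = {
        name: {callee for callee in static_calls(text) if callee in sources}
        for name, text in sources.items()
    }
    # TopologicalSorter raises CycleError if programs call each other.
    return list(TopologicalSorter(graph).static_order())
```

Leaf programs appear first in the result, which makes them natural candidates for early migration waves. A `CycleError` is itself useful information, since mutually dependent programs usually have to move together.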

Build a traceability matrix linking code components to business capabilities. This enables risk assessment: which modernization failures would disrupt critical business processes versus which affect minor convenience features. The matrix also supports phased migration planning by grouping functionality logically.

The major cloud providers each offer mainframe modernization services with different strengths, and the choice profoundly impacts architecture, tooling, and operational procedures.

AWS Mainframe Modernization: Provides both automated refactoring and replatforming options. The automated refactoring service uses Blu Age technology to transform COBOL into Java running on AWS services. The replatforming option, powered by Micro Focus, enables COBOL applications to run in managed runtime environments on AWS infrastructure. AWS strengths include mature DevOps tooling, extensive service catalog, and strong ecosystem of partners experienced in mainframe migrations. Organizations already using AWS for other workloads benefit from unified cloud operations.

Microsoft Azure Mainframe Migration: Microsoft Azure offers comprehensive mainframe transformation services including partnerships with Micro Focus, Asysco, and other vendors. Azure's strength lies in organizations already invested in Microsoft technology stacks—seamless integration with Active Directory, SQL Server, .NET development frameworks, and Microsoft 365 creates compelling synergy. Azure provides strong tools for lifting and shifting mainframes to Azure infrastructure with minimal changes, then incrementally modernizing over time.

Google Cloud Mainframe Solutions: While Google Cloud's mainframe-specific offerings are less extensive than AWS or Azure, they provide strong fundamentals including partnerships with vendors like Astadia for automated mainframe migration. Google Cloud's strengths lie in data analytics and AI/ML capabilities, making it attractive for organizations prioritizing advanced analytics on modernized data. BigQuery provides exceptional capabilities for analyzing mainframe data at scale.

The platform decision should consider existing IT investments, staff expertise, required certifications and compliance frameworks, and specific modernization strategy. Organizations refactoring COBOL to Java might prefer AWS's mature Java ecosystem, while those maintaining COBOL in containers might favor Azure's enterprise integration or Google's Kubernetes leadership.

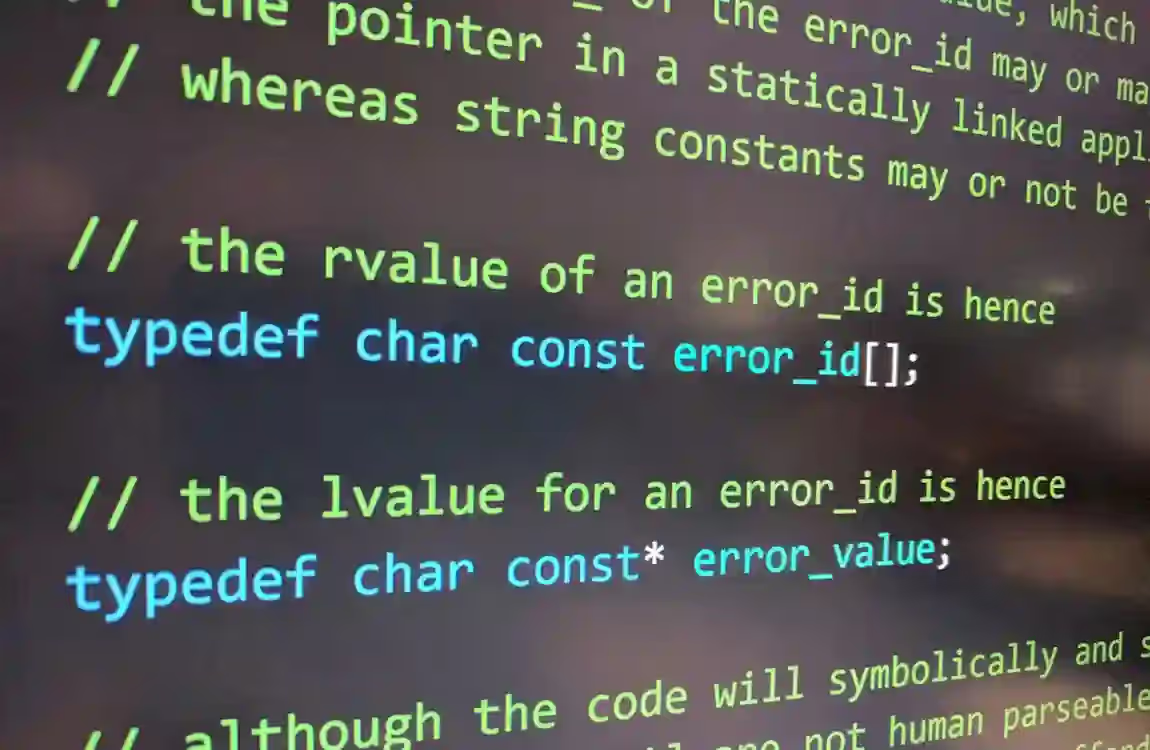

When refactoring approaches are chosen, the actual code transformation demands careful planning and execution to preserve business logic while modernizing the technology stack.

Modern refactoring tools parse COBOL source code and generate equivalent Java code. These tools map COBOL data divisions to Java classes, procedure divisions to methods, and COBOL verbs to Java statements. They generate frameworks for file I/O, database access, and transaction management.
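To give a sense of what mapping a data division involves, the following sketch derives digit count, implied decimal places, and storage length from a single PICTURE clause. It is not how any specific commercial tool works; it assumes simple pictures made of `S`, `9`, `X`, `A`, and `V`, the storage sizes commonly used by IBM COBOL compilers, and no `SIGN IS SEPARATE` clause. `FieldSpec` and `field_spec` are hypothetical names.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldSpec:
    name: str
    digits: int   # number of 9 positions (0 for alphanumeric fields)
    scale: int    # implied decimal places after the V
    length: int   # storage length in bytes


def _expand(picture: str) -> str:
    """Expand repetition factors, e.g. '9(3)V99' becomes '999V99'."""
    return re.sub(r"(.)\((\d+)\)",
                  lambda m: m.group(1) * int(m.group(2)),
                  picture.upper())


def field_spec(name: str, picture: str, usage: str = "DISPLAY") -> FieldSpec:
    expanded = _expand(picture)
    _, _, fraction = expanded.partition("V")
    digits = expanded.count("9")
    scale = fraction.count("9")
    usage = usage.upper()
    if usage in ("COMP-3", "PACKED-DECIMAL"):
        length = digits // 2 + 1            # two digits per byte plus sign
    elif usage in ("COMP", "BINARY"):
        length = 2 if digits <= 4 else 4 if digits <= 9 else 8
    else:
        # DISPLAY: one byte per character position; S and V occupy no storage.
        length = len(expanded.replace("S", "").replace("V", ""))
    return FieldSpec(name, digits, scale, length)
```

For instance, `field_spec("CUST-BAL", "S9(7)V99", "COMP-3")` yields nine digits, a scale of two, and five bytes of storage. A generated Java class would typically carry the same metadata so that records can be read and written in the original layout.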

However, automated transformation has limitations. The generated code is often verbose and procedural rather than idiomatic object-oriented Java. COBOL's paragraph structure doesn't map cleanly to object-oriented design principles. The tools generate code that works but isn't necessarily maintainable or performant.

Organizations must plan for post-transformation refactoring to improve code quality, applying object-oriented design principles, extracting reusable components, and optimizing performance. Some organizations choose selective manual refactoring, particularly for critical or complex components. This approach requires deep understanding of both COBOL and target language idioms. A hybrid approach often works best: automated transformation for bulk conversion, followed by manual optimization of critical paths.

Testing Transformed Code: Validating that transformed code behaves identically to COBOL originals represents perhaps the most critical and challenging aspect of code transformation. Organizations need multiple testing layers: unit tests verify individual program behavior, integration tests verify that modernized components interact correctly with remaining legacy systems, performance testing ensures response times meet requirements, and regression testing validates that existing functionality remains intact.

Some organizations maintain mainframe emulators in test environments to enable side-by-side comparison. Production-like data volumes stress-test transformed applications. Load testing reveals scalability characteristics that may differ significantly from mainframe behavior.
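A side-by-side comparison can be sketched as a small harness that feeds the same inputs to both implementations and records every difference. In the example, `legacy_interest` is only a stand-in for output captured from the mainframe or an emulator, and both functions and the rate are invented for illustration. The mismatch it surfaces is a typical one: a COBOL `COMPUTE` without the `ROUNDED` phrase truncates, while a rewritten routine may round half up.

```python
from decimal import Decimal, ROUND_DOWN, ROUND_HALF_UP
from typing import Any, Callable, Iterable

CENT = Decimal("0.01")
RATE = Decimal("0.0125")   # hypothetical interest rate


def legacy_interest(balance: Decimal) -> Decimal:
    # Stand-in for recorded legacy output: truncation, as without ROUNDED.
    return (balance * RATE).quantize(CENT, rounding=ROUND_DOWN)


def modern_interest(balance: Decimal) -> Decimal:
    # Hypothetical rewritten routine that rounds half up instead.
    return (balance * RATE).quantize(CENT, rounding=ROUND_HALF_UP)


def compare_outputs(legacy: Callable[[Any], Any],
                    modern: Callable[[Any], Any],
                    cases: Iterable[Any]) -> list[tuple[Any, Any, Any]]:
    """Return (input, legacy_result, modern_result) for every mismatch."""
    mismatches = []
    for case in cases:
        expected = legacy(case)
        try:
            actual = modern(case)
        except Exception as exc:  # a crash counts as a behavioral difference
            actual = f"raised {type(exc).__name__}"
        if expected != actual:
            mismatches.append((case, expected, actual))
    return mismatches


cases = [Decimal("100.00"), Decimal("100.60"), Decimal("200.00")]
mismatches = compare_outputs(legacy_interest, modern_interest, cases)
```

Only the balance of 100.60 differs here (1.25 versus 1.26), which is why test inputs should deliberately include values that fall on rounding boundaries rather than only round numbers.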

Data represents the most valuable and risky aspect of modernization. Poor data migration planning has derailed numerous projects that successfully transformed code but corrupted or lost critical business data.

VSAM to SQL Database Migration: Virtual Storage Access Method (VSAM) datasets are a primary data storage mechanism for mainframe COBOL applications. These record-oriented datasets, often key-sequenced (KSDS), must be migrated to relational databases or cloud-native data stores. The first challenge is understanding VSAM file structures. COBOL copybooks define record layouts, but business relationships between files often exist only in application logic. Normalizing this data into proper relational schemas requires careful analysis.

Data type mapping requires attention. COBOL's packed decimal fields, EBCDIC text encoding, and binary data structures need conversion to database-native types. Organizations must handle NULL values, which COBOL often represents through special values or indicator fields rather than formal NULL concepts. Key structure and indexing strategies need reconsideration—VSAM alternate indexes might map to database indexes, but cloud database performance characteristics differ from mainframe file systems.
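The NULL-handling point can be illustrated with a date field. The sketch below assumes an eight-character `YYYYMMDD` field in which spaces, all zeros, or all nines mean "no date"; those sentinel values are an assumption for the example and must be confirmed per field with the application owners, since conventions differ between systems.

```python
from datetime import date
from typing import Optional

# Hypothetical sentinel values meaning "no date" in the source system.
DATE_SENTINELS = {"", "00000000", "99999999"}


def nullable_date(raw: str) -> Optional[date]:
    """Convert a YYYYMMDD field to a date, mapping sentinels to None."""
    text = raw.strip()
    if text in DATE_SENTINELS:
        return None
    # Raises ValueError for malformed dates instead of loading bad data.
    return date(int(text[:4]), int(text[4:6]), int(text[6:8]))
```

Letting malformed values raise an error, rather than silently converting them to NULL, keeps genuine data-quality problems visible during migration rehearsals.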

ETL Framework Development: Extract-Transform-Load pipelines move data from mainframe sources to cloud destinations. These pipelines must handle initial bulk migration plus incremental synchronization during parallel operations. Tools like AWS Database Migration Service, Azure Data Factory, or Google Cloud Dataflow provide managed ETL capabilities. Organizations build extraction routines that read mainframe files or databases, transformation logic that converts data formats and performs cleansing, and load procedures that insert data into target systems with proper error handling.

Data validation proves critical. Build automated checks that compare record counts, verify key constraints, and validate business rules. Sample matching between source and target identifies systematic transformation errors. Checksums and hash comparisons detect data corruption.
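A minimal reconciliation sketch along these lines compares record counts, key coverage, and per-record hashes between source and target. It assumes both sides have already been decoded into dictionaries with the same field names and value formatting; `record_digest` and `reconcile` are hypothetical helpers, and at production volumes the same comparison would normally run inside the ETL tooling or the databases themselves.

```python
import hashlib
from typing import Any, Iterable


def record_digest(record: dict[str, Any]) -> str:
    """Hash a record using a canonical field order."""
    canonical = "|".join(f"{key}={record[key]}" for key in sorted(record))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def reconcile(source: Iterable[dict[str, Any]],
              target: Iterable[dict[str, Any]],
              key: str) -> dict[str, Any]:
    """Compare two record sets by count, key coverage, and content hash."""
    src = {row[key]: record_digest(row) for row in source}
    tgt = {row[key]: record_digest(row) for row in target}
    shared = src.keys() & tgt.keys()
    return {
        "source_count": len(src),
        "target_count": len(tgt),
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "unexpected_in_target": sorted(tgt.keys() - src.keys()),
        "changed": sorted(k for k in shared if src[k] != tgt[k]),
    }


source_rows = [{"id": 1, "name": "A"}, {"id": 2, "name": "B"}, {"id": 3, "name": "C"}]
target_rows = [{"id": 1, "name": "A"}, {"id": 2, "name": "X"}, {"id": 4, "name": "D"}]
report = reconcile(source_rows, target_rows, key="id")
```

In this example both sides contain three records, so a count check alone would pass, yet one record is missing, one is unexpected, and one has changed content. That is the reason to combine counts with key and hash comparisons.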

Cloud-Native Database Selection: Modern cloud platforms offer numerous database options, each optimized for different use cases. Traditional relational databases like Amazon RDS PostgreSQL, Azure SQL Database, or Cloud SQL provide familiar SQL interfaces and ACID transaction guarantees similar to mainframe DB2. NoSQL databases offer different trade-offs—document databases suit applications with flexible schemas, while key-value stores provide extreme scalability for simpler data models.

Handling Data Synchronization During Parallel Operations: Most migrations require running old and new systems in parallel during cutover. This demands bidirectional data synchronization—changes in either system must propagate to the other. Change data capture mechanisms identify and extract changes from source systems. Event streams or message queues transport changes to targets. Conflict resolution logic handles cases where both systems modify the same data.
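Conflict resolution can take many forms. The sketch below shows one of the simplest policies, last-writer-wins with a configurable winner on ties, purely as an illustration. It assumes both systems stamp changes with comparable timestamps, which in practice requires synchronized clocks or a shared sequence; the `Change` type and its fields are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Change:
    key: str           # business key of the modified record
    value: str         # new value (simplified to a single field)
    updated_at: float  # change timestamp, epoch seconds
    origin: str        # "legacy" or "modern"


def resolve(legacy_changes: Iterable[Change],
            modern_changes: Iterable[Change],
            tie_winner: str = "legacy") -> dict[str, Change]:
    """Pick one winning change per key using last-writer-wins."""
    winners: dict[str, Change] = {}
    for change in [*legacy_changes, *modern_changes]:
        current = winners.get(change.key)
        newer = current is None or change.updated_at > current.updated_at
        wins_tie = (current is not None
                    and change.updated_at == current.updated_at
                    and change.origin == tie_winner)
        if newer or wins_tie:
            winners[change.key] = change
    return winners
```

Last-writer-wins silently discards the losing update, so it suits fields where the latest value is always correct. For balances or counters, where both updates matter, a merge rule or manual review queue is usually the safer choice.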

Time-based cutover strategies minimize synchronization complexity. Organizations schedule cutovers during low-activity periods, freeze the legacy system, perform final data synchronization, and switch users to modernized systems. Rollback plans must account for data created in the new system during any attempted cutover.

Modernization creates opportunities to enhance applications with cloud-native services that would be impractical in mainframe environments.

API Development and Management: Exposing modernized business logic through RESTful APIs enables integration with web applications, mobile apps, and third-party systems. API gateways like AWS API Gateway, Azure API Management, or Google Cloud Endpoints provide features like authentication, rate limiting, request transformation, and analytics. Well-designed APIs abstract implementation details and enforce contracts between systems.

Security becomes paramount when exposing business logic. OAuth 2.0 provides industry-standard authorization, and OpenID Connect adds authentication on top of it. API keys, client certificates, and network restrictions add further layers of protection. Regular security testing identifies vulnerabilities before attackers do.

Serverless Computing Integration: Functions-as-a-Service platforms like AWS Lambda, Azure Functions, or Google Cloud Functions enable running code without managing servers. Certain modernized COBOL routines might be better implemented as serverless functions triggered by events or API calls. Serverless architectures suit event-driven workloads with variable demand. Organizations pay only for actual execution time rather than constantly running servers.

Consider implementing batch job orchestration using serverless components. AWS Step Functions or Azure Durable Functions coordinate complex workflows, replacing JCL job streams with visual state machines. Error handling, retries, and monitoring become more sophisticated than mainframe job schedulers typically offer.
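The behavior that managed workflow services provide can be approximated in a few lines to show the idea. This sketch runs named steps in order, retries a failing step with exponential backoff, and stops the job when a step exhausts its attempts. It is a plain in-process illustration, not the API of AWS Step Functions or Azure Durable Functions, and the step names and defaults are invented.

```python
import time
from typing import Callable


def run_job(steps: list[tuple[str, Callable[[], None]]],
            max_attempts: int = 3,
            base_delay: float = 0.0) -> list[tuple[str, int, str]]:
    """Run steps in sequence and return a (step, attempt, outcome) history."""
    history: list[tuple[str, int, str]] = []
    for name, action in steps:
        for attempt in range(1, max_attempts + 1):
            try:
                action()
                history.append((name, attempt, "ok"))
                break
            except Exception as exc:
                history.append((name, attempt, f"failed: {exc}"))
                if attempt == max_attempts:
                    return history  # stop: later steps depend on this one
                # Exponential backoff: base_delay, 2x, 4x, ...
                time.sleep(base_delay * 2 ** (attempt - 1))
    return history
```

The returned history doubles as an audit trail of which step ran, how many attempts it needed, and why it failed. Managed services record the same kind of information automatically and persist it across restarts, which this sketch does not.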

Message Queuing and Asynchronous Processing: Decoupling components through message queues improves resilience and scalability. Services like Amazon SQS, Azure Service Bus, or Google Cloud Pub/Sub enable asynchronous communication between modernized components. Queue-based architectures handle load spikes gracefully—messages accumulate during peaks and process during troughs. Failed operations retry automatically without losing data.

Mainframe applications often had tight synchronous coupling where one program directly called another. Message queues enable looser coupling where services communicate through intermediaries. This flexibility facilitates future evolution and simplifies testing through message injection.
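The retry behavior described above can be sketched with Python's standard `queue` module standing in for a managed queue service. The `drain` function and the `(body, attempts)` message shape are assumptions made for this example; real services track delivery attempts and dead-letter routing for you.

```python
import queue


def drain(work_queue, handler, max_attempts=3):
    """Process every message; requeue failures until max_attempts is reached.

    Returns the messages that never succeeded, a stand-in for a
    dead-letter queue.
    """
    dead_letters = []
    while True:
        try:
            body, attempts = work_queue.get_nowait()
        except queue.Empty:
            return dead_letters
        try:
            handler(body)
        except Exception:
            if attempts + 1 < max_attempts:
                # Put the message back so it is retried later, not lost.
                work_queue.put((body, attempts + 1))
            else:
                dead_letters.append(body)
```

Because the handler receives plain message bodies, a test can inject messages directly into the queue without starting the producing service, which is the message injection benefit mentioned above.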

Modern cloud platforms enable sophisticated deployment strategies and comprehensive observability that far exceed mainframe capabilities. Organizations should leverage these capabilities to reduce risk and improve operational excellence.

CI/CD Pipeline Implementation: Continuous Integration and Continuous Deployment pipelines automate building, testing, and deploying applications. Changes committed to version control trigger automated build processes, unit tests, integration tests, and potentially deployment to production. Tools like Jenkins, GitLab CI, AWS CodePipeline, Azure DevOps, or Google Cloud Build orchestrate these workflows.

Pipeline definitions stored as code enable version control and peer review of deployment processes themselves. Automated gates prevent defective code from reaching production. Infrastructure as Code using Terraform, AWS CloudFormation, Azure ARM templates, or Google Cloud Deployment Manager enables reproducible environment creation.

Blue-Green and Canary Deployments: Blue-green deployment maintains two identical production environments, only one actively serving traffic. New versions deploy to the inactive environment, undergo final validation, then traffic switches over. If problems arise, instant rollback returns to the previous version. Canary deployments gradually roll out changes to small percentages of users before full deployment. Monitor key metrics during canary phases—error rates, response times, transaction success rates. If metrics degrade, abort the deployment automatically before all users are affected.
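The automated abort decision for a canary can be reduced to a comparison between baseline and canary metrics. The sketch below assumes two hypothetical inputs, an error rate and a 95th percentile latency, and illustrative thresholds; real pipelines would pull these values from their monitoring service and tune the limits per application.

```python
from dataclasses import dataclass


@dataclass
class Metrics:
    error_rate: float       # fraction of failed requests, 0.0 to 1.0
    p95_latency_ms: float   # 95th percentile response time


def canary_decision(baseline, canary, max_error_increase=0.01, max_latency_ratio=1.2):
    """Return "promote" or "abort" by comparing canary metrics to the baseline."""
    # Abort if the canary fails noticeably more often than the current version.
    if canary.error_rate > baseline.error_rate + max_error_increase:
        return "abort"
    # Abort if the canary is markedly slower than the current version.
    if canary.p95_latency_ms > baseline.p95_latency_ms * max_latency_ratio:
        return "abort"
    return "promote"
```

Comparing against a live baseline rather than fixed limits keeps the gate meaningful when overall traffic conditions change during the rollout.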

Cloud Monitoring and Observability: Comprehensive observability comprises logging, metrics, and distributed tracing. Cloud platforms provide sophisticated monitoring services like Amazon CloudWatch, Azure Monitor, or Google Cloud Operations. Application logs capture detailed information about execution, errors, and business events. Centralized log aggregation enables searching across distributed systems.

Metrics track quantitative measurements: request rates, error rates, latency percentiles, resource utilization. Dashboards visualize system health. Alerts notify operators when metrics exceed thresholds. Distributed tracing follows requests across multiple services, identifying bottlenecks in complex transaction flows.
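As an illustration of latency percentiles and threshold alerts, the following sketch computes a nearest-rank percentile and checks two limits. The function names and default thresholds are assumptions for this example; monitoring services calculate percentiles and evaluate alarms natively.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def check_alerts(latencies_ms, errors, requests, p99_limit_ms=500, error_rate_limit=0.01):
    """Return a list of alert messages for thresholds that were exceeded."""
    alerts = []
    p99 = percentile(latencies_ms, 99)
    if p99 > p99_limit_ms:
        alerts.append(f"p99 latency {p99} ms exceeds {p99_limit_ms} ms")
    error_rate = errors / requests if requests else 0.0
    if error_rate > error_rate_limit:
        alerts.append(f"error rate {error_rate:.2%} exceeds {error_rate_limit:.2%}")
    return alerts
```

Percentiles matter because averages hide slow outliers: a service can have a healthy mean latency while one request in a hundred takes several seconds.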

While many enterprise modernization efforts remain confidential, several organizations have shared their experiences, providing valuable lessons for others embarking on similar journeys.

Major U.S. Insurance Provider: A Fortune 500 insurance company successfully migrated its policy administration system from COBOL to Java over a three-year period. The system processed millions of policies and had been in production for over 30 years. The organization chose automated refactoring using tools from AWS Mainframe Modernization combined with selective manual rewriting of critical components.

The project began with six months of assessment, analyzing 2.8 million lines of COBOL code. They discovered that 35% of programs hadn't been modified in over a decade and another 20% were no longer called by any active workflow—substantial dead code. The modernization team focused on the actively used 45%, approximately 1.2 million lines.

They adopted a phased approach, modernizing and migrating one product line at a time. Home insurance migrated first as a pilot, followed by auto, then life insurance products. Each phase included three months of parallel operation where both systems processed transactions. Data reconciliation processes validated that both systems produced identical results before cutover.
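A reconciliation check of this kind can be sketched as a keyed comparison of the two systems' outputs. The source does not describe the insurer's actual process, so the `reconcile` function, the policy IDs, and the premium values below are purely illustrative.

```python
from decimal import Decimal


def reconcile(legacy, modern):
    """Compare two {policy_id: premium} mappings and report every discrepancy."""
    report = {"missing_in_modern": [], "missing_in_legacy": [], "mismatched": []}
    for policy_id in sorted(legacy.keys() | modern.keys()):
        if policy_id not in modern:
            report["missing_in_modern"].append(policy_id)
        elif policy_id not in legacy:
            report["missing_in_legacy"].append(policy_id)
        elif legacy[policy_id] != modern[policy_id]:
            report["mismatched"].append(
                (policy_id, legacy[policy_id], modern[policy_id])
            )
    return report


# Made-up sample data: Decimal avoids the rounding drift of binary floats,
# which would otherwise show up as false mismatches on monetary fields.
legacy_run = {"P-001": Decimal("100.00"), "P-002": Decimal("250.10")}
modern_run = {"P-001": Decimal("100.00"), "P-002": Decimal("250.11")}
print(reconcile(legacy_run, modern_run))
```

Cutover is only reasonable once such a report stays empty across a representative period, including month-end and other irregular processing cycles.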

The organization realized a 40% reduction in infrastructure costs within the first year, eliminated mainframe hardware refresh expenses, and reduced the average time to deploy new features from six months to three weeks. Developer productivity increased because new hires could contribute productively within months rather than the years required for COBOL competency.

Federal Government Agency: A large federal agency modernized benefits processing systems serving millions of citizens. Rather than big-bang replacement, they adopted API encapsulation as an interim strategy while planning comprehensive modernization. Using IBM z/OS Connect, they exposed mainframe transactions as REST APIs, enabling development of modern web and mobile interfaces while maintaining proven business logic.

This approach delivered citizen-facing improvements within 18 months, a timeline that would have been impossible with a complete rewrite. The API layer provides an abstraction boundary for gradual backend modernization. As new services are built, they implement APIs previously backed by mainframe calls. Frontend applications remain unchanged as backend implementation shifts.
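The abstraction boundary can be pictured as a facade that routes each operation to whichever backend currently implements it. The sketch below is a simplified illustration, not the agency's design or the z/OS Connect API; `ApiFacade`, the backend functions, and the operation names are all hypothetical.

```python
class ApiFacade:
    """Routes each operation to whichever backend currently implements it."""

    def __init__(self, mainframe_backend):
        self._default = mainframe_backend
        self._overrides = {}

    def migrate(self, operation, new_backend):
        """Point one operation at a modern service; all others stay on the mainframe."""
        self._overrides[operation] = new_backend

    def call(self, operation, payload):
        backend = self._overrides.get(operation, self._default)
        return backend(operation, payload)


def mainframe_backend(operation, payload):
    # Placeholder for a request sent through the API layer in front of the mainframe.
    return {"source": "mainframe", "operation": operation, **payload}


def eligibility_service(operation, payload):
    # Placeholder for a newly built service honoring the same contract.
    return {"source": "cloud", "operation": operation, **payload}
```

Frontends only ever call `facade.call(...)`, so moving an operation is a one-line `migrate` change on the server side and can be reversed just as easily if the new service misbehaves.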

Regional Bank Core Banking Transformation: A mid-sized regional bank replaced its custom COBOL core banking system with a commercial banking platform from a major vendor. The 25-year-old system had become a constraint—new products took 18-24 months to implement, and integration with fintech partners proved nearly impossible.

The transformation took four years and cost $45 million—significantly over original estimates. Data migration proved more complex than anticipated, requiring extensive cleansing of decades of accumulated inconsistencies. The organization discovered undocumented business rules embedded in COBOL that the commercial package didn't replicate, forcing either custom extensions or business process changes.

Despite challenges, the bank now launches new products in weeks, integrates readily with third-party services, and has significantly improved mobile banking capabilities. The commercial vendor handles regulatory updates and security patches, freeing IT staff for customer-facing innovation. This case demonstrates both the challenges and ultimate value of comprehensive modernization.

Realistic expectations about investment required and timeline to value realization are essential for securing organizational commitment and planning appropriately.

Modernization Timeline Expectations:

Timeline estimates assume organizations have clear modernization strategies, executive commitment, adequate budgets, and skilled teams. Projects lacking these frequently stall or fail.

Beyond direct technology and service costs, organizations must budget for multiple investment categories:

Comprehensive Cost Components:

Total program costs for mid-sized enterprises typically range from $3-15 million, while large, complex estates can require $20-100+ million investments over multi-year periods. These figures represent substantial commitments but must be weighed against the mounting costs and risks of maintaining legacy systems indefinitely.

Underestimating Assessment Requirements: Organizations eager to start modernization often rush assessment, discovering critical dependencies mid-project that force expensive rework. Mitigation strategy: Invest thoroughly in assessment before committing to approaches. The 10-15% of budget spent on comprehensive analysis prevents costly mid-course corrections and provides the foundation for realistic planning.

Inadequate Testing: Incomplete testing causes production defects that erode trust and business value, potentially forcing expensive emergency fixes or even rollbacks. Mitigation strategy: Budget 30-40% of project effort for testing activities. Automate regression tests extensively. Establish clear acceptance criteria before beginning development. Involve business users in validation throughout the process, not just at the end.

Data Migration Complexity: Data problems derail projects that successfully transform code, causing business disruption when incorrect or incomplete data reaches production. Mitigation strategy: Start data analysis early in the project. Build comprehensive data validation frameworks. Plan for data cleansing activities. Test migrations with production-scale volumes. Execute multiple dry runs before actual cutover to identify and resolve issues in controlled environments.
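One building block of a data validation framework is a set of control totals computed on both source and target after each dry run. The sketch below assumes rows are available as dictionaries with identically formatted values on both sides; `control_totals` and the field names are hypothetical.

```python
import hashlib
from decimal import Decimal


def control_totals(rows, amount_field):
    """Summarize a dataset as (row count, amount sum, order-independent digest)."""
    count = 0
    total = Decimal("0")
    digest = 0
    for row in rows:
        count += 1
        total += Decimal(str(row[amount_field]))
        # Canonical form: fields sorted by name so key order does not matter.
        canonical = "|".join(f"{key}={row[key]}" for key in sorted(row))
        row_hash = hashlib.sha256(canonical.encode("utf-8")).digest()
        # Modular addition keeps the digest independent of row order.
        digest = (digest + int.from_bytes(row_hash, "big")) % (1 << 256)
    return count, total, digest
```

Matching totals do not prove the migration is correct, but a mismatch is a cheap and early signal that records were dropped, duplicated, or altered, and it scales to production-size volumes because each side is scanned only once.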

Skill Gaps: Organizations may lack cloud expertise, modern development capabilities, or change management skills needed for successful transformation. Mitigation strategy: Invest in training and coaching programs that build internal capabilities. Engage experienced partners specifically for knowledge transfer, not just execution. Build Centers of Excellence to develop and disseminate expertise. Don't rely entirely on consultants—ensure knowledge remains after they leave through structured knowledge transfer programs.

Scope Creep: Modernization projects tempt organizations to add new features or address adjacent problems, expanding scope and timeline unpredictably. Mitigation strategy: Maintain strict scope discipline throughout the project. Achieve functional equivalence first as the primary objective. Plan future enhancements for separate phases after successful migration. Use formal change control processes to evaluate and approve any scope modifications.

Inadequate Executive Sponsorship: Modernization requires sustained commitment through inevitable challenges, resource constraints, and competing priorities. Mitigation strategy: Secure C-level sponsorship before beginning. Establish steering committees with business and IT leadership representation. Communicate progress and challenges regularly through multiple channels. Frame modernization as a business transformation that enables strategic objectives, not merely an IT project.

Organizations can choose from various commercial and open-source tools that accelerate and de-risk modernization efforts.

AWS Mainframe Modernization: Offers comprehensive services including automated refactoring that transforms COBOL to Java using Blu Age technology, plus managed runtime for rehosted applications using Micro Focus Enterprise Server. The fully managed service handles infrastructure provisioning, scaling, and operations. Organizations benefit from deep integration with AWS services for databases, analytics, and AI/ML capabilities that enable sophisticated modern architectures.

Azure Mainframe Migration: Provides assessment tools, migration partners, and runtime environments for modernized mainframes. Strong integration with Microsoft ecosystems—Active Directory, .NET frameworks, SQL Server—creates synergy for organizations invested in Microsoft technologies. Azure Site Recovery provides disaster recovery capabilities exceeding typical mainframe implementations.

Google Cloud: While having fewer mainframe-specific offerings, Google Cloud provides strong fundamentals including partnerships with migration tool vendors and exceptional data analytics capabilities through BigQuery and other services that excel at analyzing modernized mainframe data.

Micro Focus: One of the dominant players in COBOL modernization, Micro Focus (acquired by OpenText in 2023) offers Enterprise Server for rehosting COBOL applications to x86 or cloud platforms, Visual COBOL for modern COBOL development, and analysis tools for understanding legacy estates. Their solutions enable COBOL to run in containers, integrate with .NET applications, and leverage modern development practices while maintaining the COBOL language.

IBM Z Modernization: Provides extensive mainframe modernization capabilities including z/OS Connect for API enablement, Wazi as a Service for cloud-based mainframe development, and Application Discovery and Delivery Intelligence for analyzing legacy applications. IBM's tools focus on gradual modernization that preserves mainframe investments while incrementally adopting cloud capabilities.

Specialized Transformation Vendors: Heirloom Computing specializes in automated transformation to Java or C# with cloud deployment. TSRI offers automated transformation services converting COBOL, JCL, and mainframe databases to Java and modern infrastructure. LzLabs provides a managed software container approach enabling mainframe applications to run unchanged on Linux servers. Blu Age, now part of AWS, pioneered automated COBOL-to-Java transformation with emphasis on microservices-based applications.

Red Hat OpenShift provides enterprise Kubernetes with additional developer tools, security hardening, and operational capabilities. OpenShift supports containerized COBOL applications and offers strong hybrid cloud capabilities for gradual migration strategies, enabling organizations to run workloads consistently across on-premises and cloud infrastructure.

Modernization creates both opportunities and challenges for security and compliance. While cloud platforms offer sophisticated security capabilities exceeding typical mainframe implementations, the migration process itself introduces risk that requires careful management.

Cloud platforms enable security improvements difficult or impossible in mainframe environments. Zero Trust architectures replace perimeter-based security models, assuming no implicit trust regardless of network location. Modern identity and access management systems provide granular authorization, multi-factor authentication, and comprehensive audit logging that tracks every access attempt.

The NIST Cybersecurity Framework and the NIST SP 800-53 control catalog are easier to implement in cloud environments that provide extensive logging, monitoring, and control capabilities as standard features. Encryption capabilities extend beyond traditional database-level encryption: cloud platforms offer encryption at rest for storage, encryption in transit for network communications, and even encryption in use through confidential computing technologies. Key management services provide centralized cryptographic key lifecycle management with hardware security module backing.

Continuous security monitoring and automated remediation replace periodic security assessments. Cloud Security Posture Management tools continuously evaluate configurations against security best practices and compliance frameworks, automatically remediating violations or alerting security teams. This represents substantial improvement over mainframe security audits conducted quarterly or annually with significant lag between vulnerability identification and remediation.
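Conceptually, posture management evaluates every resource against a set of rules and reports the violations. The following sketch shows only that core loop; the rule names and resource attributes are invented for illustration and do not correspond to any vendor's rule catalog.

```python
def evaluate_posture(resources, rules):
    """Return (resource_id, rule_name) for every rule a resource violates."""
    findings = []
    for resource in resources:
        for name, is_compliant in rules.items():
            if not is_compliant(resource):
                findings.append((resource["id"], name))
    return findings


# Hypothetical rules over hypothetical resource attributes.
RULES = {
    "storage-encrypted-at-rest": lambda r: r.get("encrypted", False),
    "no-public-access": lambda r: not r.get("public", False),
}
```

Running such checks on every configuration change, rather than on an audit schedule, is what shortens the gap between a misconfiguration appearing and someone acting on it.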

Regulated industries face specific compliance requirements that modernization must address. SOC 2 Type II certifications require documented controls around security, availability, processing integrity, confidentiality, and privacy. Cloud platforms provide compliance artifacts and shared responsibility models that clarify which controls the cloud provider manages versus which the customer must implement.

PCI DSS compliance for payment card processing requires specific security controls around cardholder data. Cloud platforms offer PCI-compliant infrastructure, but applications must be architected correctly to maintain compliance. Modernization provides opportunities to improve cardholder data handling, implement network segmentation more effectively, and enhance logging and monitoring beyond what was practical in mainframe environments.

GDPR and data privacy regulations mandate specific data handling practices including data subject rights, breach notification, and data minimization. Modernization enables better data governance—knowing where personal data resides, how it's processed, and who accesses it. Cloud platforms provide geographic deployment controls ensuring data residency requirements are met.

The migration itself may trigger compliance reviews, particularly when moving data across jurisdictions or changing data storage mechanisms. Modernization projects should include compliance experts from inception to ensure new architectures meet all applicable requirements and that the transition maintains continuous compliance without gaps.

The mainframe modernization landscape continues evolving rapidly. Understanding emerging trends helps organizations make future-proof architectural decisions.

Artificial intelligence and machine learning are transforming legacy modernization approaches. Large language models trained on vast codebases can understand COBOL syntax, infer business logic intent, and generate modern equivalents with increasing sophistication. These models go beyond simple syntactic transformation to understand semantic meaning, potentially producing better refactored code than traditional automated tools.

AI-powered code analysis identifies technical debt, security vulnerabilities, and optimization opportunities that human reviewers might miss across millions of lines of code. Machine learning models trained on code examples recognize anti-patterns and suggest architectural improvements. Generative AI can produce comprehensive documentation for undocumented legacy code, extracting business logic descriptions that accelerate modernization planning.

Organizations should expect AI-assisted modernization tools to become standard within 2-3 years, significantly reducing transformation costs and timelines. However, human oversight remains critical—AI can produce confident but incorrect transformations that require expert review.

The dichotomy between "mainframe" and "cloud" continues blurring. Hybrid architectures that maintain certain processing on Z hardware while leveraging cloud for others become increasingly sophisticated. IBM's hybrid cloud strategy, built around Red Hat OpenShift, enables containers to run seamlessly across mainframe and cloud infrastructure.

Organizations may choose to maintain critical transactions on Z hardware optimized for OLTP workloads while moving analytics, machine learning, and less-critical applications to public cloud. This nuanced approach recognizes that wholesale mainframe abandonment may not optimize for business needs. Mainframe-as-a-Service offerings from cloud providers and specialized vendors enable organizations to access mainframe capacity without capital investments.

Environmental considerations increasingly influence technology decisions. Cloud computing's energy efficiency typically exceeds that of on-premises data centers through economies of scale and renewable energy investments. Mainframe modernization often reduces carbon footprint by eliminating dedicated data center infrastructure with specialized cooling requirements.

Organizations are beginning to incorporate sustainability metrics into modernization business cases. Total Cost of Ownership now includes environmental impact alongside financial costs. Cloud providers' transparency around carbon emissions enables informed decisions that balance business requirements with environmental responsibility.

The journey from mainframe-bound COBOL applications to modern, cloud-native architectures represents one of the most significant technology transformations enterprises face. While the challenges are substantial—technical complexity, organizational change, substantial investment, and inherent risk—the imperative for modernization grows stronger as digital transformation accelerates and the talent crisis deepens.

Organizations that successfully navigate this transformation realize profound benefits extending far beyond cost reduction. Architectural agility enables rapid response to market opportunities and competitive threats. Scalability supports business growth without infrastructure constraints. Reduced costs free capital for innovation investments. Improved maintainability through modern languages and practices ensures long-term sustainability. Enhanced security and compliance capabilities reduce risk while enabling new business models. Better developer productivity and satisfaction improve recruitment and retention in competitive talent markets.

No single modernization approach suits all situations. Organizations must carefully evaluate their specific circumstances—application criticality, complexity, regulatory requirements, risk tolerance, available budget, internal capabilities, and timeline constraints—to select appropriate strategies. A large enterprise portfolio likely requires multiple approaches: API enablement for certain systems providing quick wins, refactoring for core applications requiring long-term support, replatforming for some workloads balancing risk and reward, and replacement with commercial packages for commodity functions that don't differentiate the business.

Success factors transcend technical execution. Executive sponsorship provides sustained commitment through inevitable challenges and resource competition. Comprehensive planning including thorough assessment reduces expensive mid-course corrections and builds realistic expectations. Investment in people through training, coaching, and organizational change management ensures teams can support modernized environments effectively. Phased approaches that deliver incremental value maintain momentum and allow learning from early phases to inform later work.

Organizations should resist the temptation to delay action. The talent crisis worsens daily as COBOL programmers retire without replacement. Technical debt accumulates, making eventual modernization more expensive and risky. Competitors gain advantages through modern architectures that enable faster innovation and better customer experiences. The regulatory environment grows more complex, straining systems designed decades ago. While modernization seems daunting, inaction carries greater long-term risk to business continuity and competitive positioning.

Start with small pilot projects to build confidence and develop internal capabilities without betting the entire enterprise on unproven approaches. Choose less critical applications for initial modernization to learn lessons applicable to core systems. Build Centers of Excellence that develop deep expertise in modernization practices and disseminate knowledge across the organization. Engage experienced partners for knowledge transfer while consciously developing internal competency that persists beyond consultant engagements.

The COBOL to cloud transformation represents not just a technology migration but a fundamental reimagining of how organizations leverage information technology for business value. Those who approach it strategically with clear objectives, invest appropriately in both technology and people, and execute thoughtfully with proper risk management will emerge with competitive advantages that compound over years. Those who defer action face increasingly precarious positions as both technology and talent landscapes shift beneath them.

The modernization journey is long, complex, and sometimes frustrating. Success requires persistence through setbacks, flexibility to adjust approaches when reality diverges from plans, and sustained commitment when results take time to materialize. But the destination—applications that are agile, scalable, maintainable, secure, and positioned to leverage emerging technologies—makes the journey worthwhile. Organizations worldwide are successfully navigating this transformation, proving that with proper planning, realistic expectations, and sustained commitment, legacy modernization can deliver transformative business value. With the frameworks, strategies, and lessons detailed in this guide, your organization can join them in successfully transitioning from legacy COBOL systems to modern cloud architectures that power the next decade of business growth and innovation.
